When buying a server/white box for high-performance applications such as SDN and NFV, it is essential to know what features to look for in terms of network acceleration and hardware offload.

Also, in compact, high-traffic sites such as edge data centers, these hardware acceleration technologies can improve efficiency and deliver CAPEX/OPEX savings.

Many such technologies exist; the most popular ones are described below.

Non-Uniform Memory Access (NUMA)

NUMA describes how a processor accesses memory modules. It improves on the traditional approach, called Uniform Memory Access (UMA).

How?

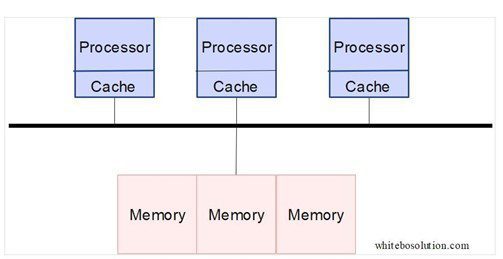

In UMA, as shown below, multiple processors access memory modules through a common bus.

Fig: Uniform Memory Access (UMA)


Because the bus is shared, the access time to memory is the same for each processor, irrespective of which memory module it uses.

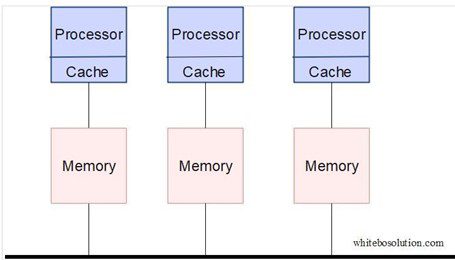

On the other hand, servers with NUMA have a faster and more efficient way of accessing memory.

As shown below, in NUMA, each processor has its own local memory module, which it accesses directly and therefore faster.

It also has access to a shared bus to use a memory module connected to another processor.

Fig: Non-Uniform Memory Access (NUMA)


There is a catch: if the data resides in the memory directly connected to the processor, access is fast, but if it resides in the memory connected to another processor, access is slower.
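Which memory counts as "local" depends on which NUMA node a CPU belongs to. On Linux, this layout is exposed under `/sys/devices/system/node`, where each `nodeN/cpulist` file lists the CPUs attached to that node. The following is a minimal sketch, assuming a Linux host; the function names `parse_cpulist` and `numa_topology` are illustrative and not part of any library.

```python
from pathlib import Path
from typing import Dict, List


def parse_cpulist(text: str) -> List[int]:
    """Expand a Linux cpulist string such as "0-3,8-11" into CPU numbers."""
    cpus: List[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty file means the node has no CPUs
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_topology(sysfs_root: str = "/sys/devices/system/node") -> Dict[int, List[int]]:
    """Map each NUMA node number to the CPUs that are local to it."""
    topology: Dict[int, List[int]] = {}
    for node_dir in sorted(Path(sysfs_root).glob("node[0-9]*")):
        node_id = int(node_dir.name[len("node"):])
        topology[node_id] = parse_cpulist((node_dir / "cpulist").read_text())
    return topology


if __name__ == "__main__":
    # Prints nothing on systems that do not expose this sysfs directory.
    for node, cpus in numa_topology().items():
        print(f"node {node}: CPUs {cpus}")
```

With this mapping, a workload can be pinned to CPUs on the same node as the memory it uses, so that most of its accesses stay on the fast local path.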

However, when the average memory access time is calculated, NUMA typically wins against UMA as long as most accesses go to local memory, which makes it a highly desirable feature.
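The averaging argument can be sketched as a weighted mean of the local and remote access times. The latencies below are hypothetical numbers chosen only for illustration, not measurements of any real server, and the helper names are made up for this example.

```python
def average_access_ns(local_fraction: float, local_ns: float, remote_ns: float) -> float:
    """Weighted average memory access time for a NUMA system."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


def break_even_local_fraction(uma_ns: float, local_ns: float, remote_ns: float) -> float:
    """Fraction of local accesses at which NUMA matches the UMA access time."""
    return (remote_ns - uma_ns) / (remote_ns - local_ns)


# Hypothetical latencies in nanoseconds, for illustration only.
LOCAL_NS, REMOTE_NS, UMA_NS = 80.0, 140.0, 110.0

if __name__ == "__main__":
    for fraction in (0.5, 0.8, 0.95):
        avg = average_access_ns(fraction, LOCAL_NS, REMOTE_NS)
        print(f"{fraction:.0%} local: {avg:.1f} ns on NUMA vs {UMA_NS:.1f} ns on UMA")
```

Under these assumed numbers, NUMA comes out ahead whenever more than half of the accesses are local, which is why keeping a workload and its data on the same node matters.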

Single Root I/O Virtualization (SR-IOV)

SR-IOV is an important feature that enables a server to move data with high throughput. Thanks to enhancements in PCI Express (PCIe), the interconnect through which server peripherals communicate with the processor, PCIe devices can now be virtualized, just as servers are.

By logically partitioning a PCIe device, it is possible to present a physical function (PF), such as a NIC, as multiple virtual functions (VFs). These VFs share the same physical NIC, and each VF can then be assigned to a VM.

To appreciate the advantages of SR-IOV, let’s go through the traditional data transfer in a server.

Normally, all communication in a server with multiple VMs goes through OVS (Open vSwitch), but it does not need to be that way.
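On Linux, the PF/VF split is visible in sysfs: an SR-IOV capable NIC exposes `sriov_totalvfs` (the maximum number of VFs) and `sriov_numvfs` (the number currently enabled) under its PCI device directory. The following is a minimal sketch, assuming a Linux host; the function name `sriov_vf_counts` and the interface name `eth0` are placeholders for illustration.

```python
from pathlib import Path
from typing import Optional, Tuple


def sriov_vf_counts(interface: str, sysfs_net: str = "/sys/class/net") -> Optional[Tuple[int, int]]:
    """Return (enabled_vfs, max_vfs) for a NIC, or None if it exposes no SR-IOV files."""
    device = Path(sysfs_net) / interface / "device"
    total_file = device / "sriov_totalvfs"
    if not total_file.exists():
        return None  # interface missing, virtual, or not SR-IOV capable
    enabled = int((device / "sriov_numvfs").read_text())
    total = int(total_file.read_text())
    return enabled, total


if __name__ == "__main__":
    counts = sriov_vf_counts("eth0")  # "eth0" is a placeholder interface name
    if counts is None:
        print("eth0 does not expose SR-IOV attributes")
    else:
        print(f"eth0: {counts[0]} of {counts[1]} VFs enabled")
```

VFs are typically created by writing the desired count to `sriov_numvfs` as root, after which each VF appears as its own PCI device that can be passed to a VM.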

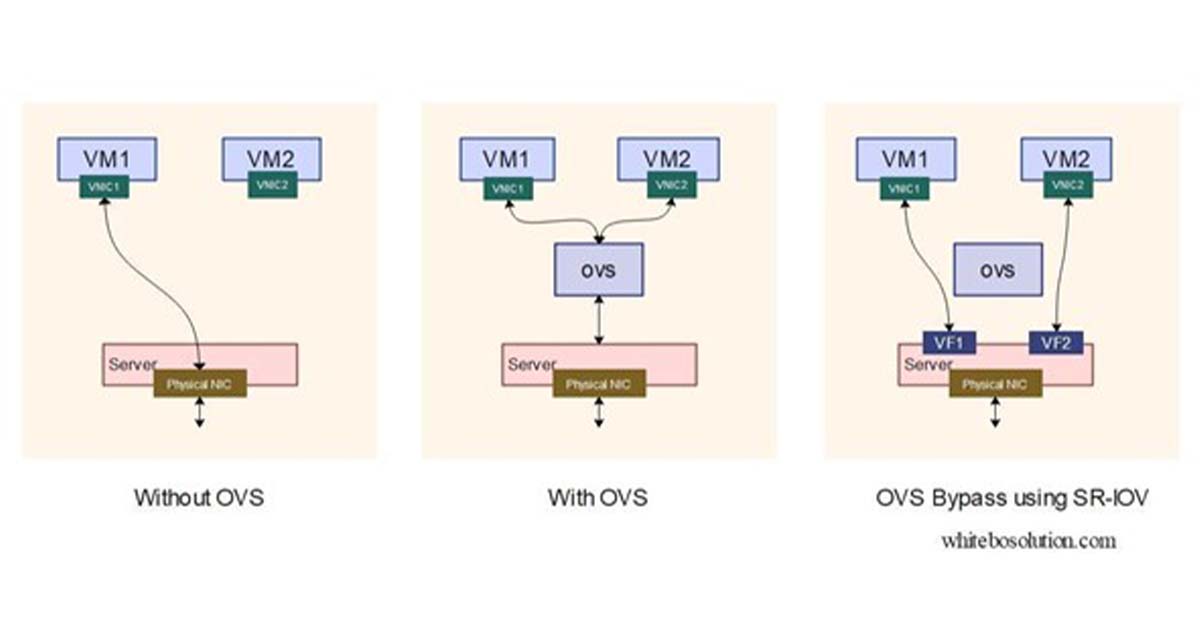

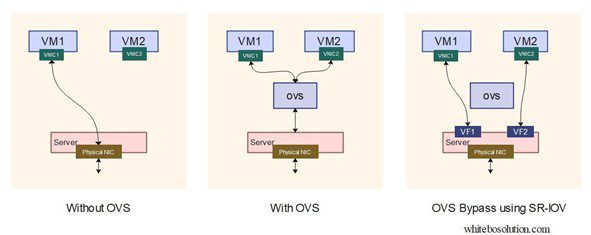

In the diagram below, the case without OVS shows one of the VMs communicating with the outside world through the server’s physical NIC.

However, did you notice the limitation?

Only one VM can communicate as the physical NIC is dedicated to it. The result is that if we introduce a second VM (VM2), it cannot communicate with the outside world. Therefore, this is not a viable option for server virtualization.

What happens when we introduce OVS, as in case 2 below?

Thanks to its layer 2 switching functionality, OVS can partition the traffic at layer 2 so that the two VMs use the same physical NIC to communicate simultaneously.
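To make the layer 2 idea concrete, here is a toy model of a learning switch that forwards frames by destination MAC address. It is a simplified illustration only and not how OVS is implemented; the class name, port names, and MAC addresses are all made up for the example.

```python
class LearningSwitch:
    """Toy layer 2 switch: learns which port each MAC address lives on."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}  # MAC address -> port name

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame is sent out of."""
        self.mac_table[src_mac] = in_port  # learn the sender's location
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination sits behind the ingress port; nothing to forward
        return [out_port]


if __name__ == "__main__":
    switch = LearningSwitch(["vm1", "vm2", "nic"])
    # VM1 sends to an outside host whose location is not yet known.
    print(switch.receive("vm1", "02:00:00:00:00:01", "02:00:00:00:00:aa"))
    # The reply arrives on the physical NIC and is delivered to VM1 only.
    print(switch.receive("nic", "02:00:00:00:00:aa", "02:00:00:00:00:01"))
```

Every one of these lookups runs in software on the host, which is the cost the next paragraphs turn to.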

So far, so good.

However, data throughput then depends heavily on OVS, which is part of the hypervisor and consumes CPU resources.

A better and more efficient way is to bypass the OVS/hypervisor altogether, as shown in case 3 below.

Bypassing OVS is only possible if the NIC itself can be partitioned logically. SR-IOV does precisely that through the logical partitioning of PCIe.

In the example below, SR-IOV creates two virtual functions (VFs), and each VF is dedicated to one VM, bypassing OVS completely. The result is higher throughput (close to line rate) that is largely unaffected by CPU utilization.

Fig: Traditional vs. SR-IOV


Intel QuickAssist Technology (QAT)

Intel QAT is a technology developed by Intel that works on Intel architecture. With QAT, processor-intensive jobs related to encryption and compression are offloaded to QAT accelerator chipsets, saving processor resources and speeding up encryption and compression.

Overall, these accelerators are more efficient than general-purpose CPUs for such tasks in terms of cost and power.
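To get a feel for the kind of work that gets offloaded, the sketch below compresses a payload in software and measures the CPU time it takes. It uses Python's standard `zlib` module as a software baseline and does not call QAT in any way; the payload and the helper name `compress_on_cpu` are invented for illustration.

```python
import time
import zlib


def compress_on_cpu(payload: bytes, level: int = 6):
    """Compress payload in software and return (compressed_bytes, cpu_seconds)."""
    start = time.process_time()
    compressed = zlib.compress(payload, level)
    return compressed, time.process_time() - start


if __name__ == "__main__":
    # Hypothetical, highly repetitive payload standing in for network traffic.
    payload = b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n" * 20000
    compressed, cpu_seconds = compress_on_cpu(payload)
    assert zlib.decompress(compressed) == payload  # round trip is lossless
    print(f"{len(payload)} -> {len(compressed)} bytes, {cpu_seconds * 1000:.2f} ms of CPU time")
```

Every millisecond reported here is processor time that is unavailable to applications, and it is this class of work that a dedicated accelerator takes off the CPU.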

SmartNIC

SmartNICs are Network Interface Cards (NICs) that feature integrated accelerators. They reduce latency and packet loss, accelerate encryption, and perform load balancing and traffic monitoring functions.

SmartNICs can serve networking needs and also act as storage controllers for SSDs and HDDs. They also offload security and storage-related tasks from the processor, freeing more of its computing power for applications.

A SmartNIC consolidates networking, storage, and security functions into one component, which reduces inventory and CAPEX investment.

In an environment where increased integration and intelligence of servers is desirable, incorporating SmartNICs into servers makes sense.

Network accelerators & hardware offload matter a lot on the Edge.

When space and power are constrained in edge DCs, an intelligent server architecture based on hardware offload/network acceleration can result in CAPEX/OPEX savings. Thanks to technologies such as NUMA, QAT, SR-IOV, and SmartNIC, the edge servers by vendors such as Lanner are innovative, integrated, power-friendly, and ideal for edge DC applications.

Edge acceleration in Lanner’s Products

Lanner, a leading provider of white box solutions, has smart, innovative, and cutting-edge platforms for deployment in edge and core applications.

Lanner’s white box platform HTCA (HTCA-6600, HTCA-6400, HTCA-6200) is an all-in-one MEC/edge platform that comes in form factors from 2U to 4U, suitable for edge data centers that are limited in space and power. The platform includes various compute blades and switch blades based on programmable data planes such as the Intel Tofino ASIC (HLM-1101).

HTCA offers acceleration technologies such as NUMA, Intel QAT, and SR-IOV:

- The Intel Tofino IFP has dual dedicated Ethernet links to each NUMA node

- The HTCA server blades have dual QuickAssist accelerators, one for each NUMA node

- It has Intel E810 Ethernet adapters that support SR-IOV for network acceleration

To meet the demands of cloud computing and virtualized networks, Virtual Extensible LAN (VXLAN) can create virtual networks that transmit data over IP infrastructure. Scalability in software-defined networking (SDN)/network functions virtualization (NFV) deployments can be achieved with a SmartNIC that takes on VXLAN-related tasks, thus increasing CPU efficiency. The Lanner FX-3230 server appliance, integrated with Ethernity Networks’ ACE-NIC SmartNIC through PCI Express, is a potential solution for this need.
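The per-packet work involved in VXLAN can be illustrated with the header itself. As defined in RFC 7348, each encapsulated frame carries an 8-byte VXLAN header holding a flags byte and a 24-bit VXLAN Network Identifier (VNI), sent over UDP port 4789. The following is a minimal sketch of building that header in software; the function names are illustrative, and the outer Ethernet, IP, and UDP headers are left out for brevity.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header (outer headers omitted)."""
    return vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    print(vxlan_header(5000).hex())
```

Doing this, plus the outer headers and checksums, for every packet on the CPU adds up quickly at high packet rates, which is the motivation for moving it onto a SmartNIC.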

Lanner operates in the US through its subsidiary Whitebox Solutions – www.whiteboxsolution.com